The 1950s weren’t just about Elvis and diner jukeboxes; there was a different kind of revolution brewing inside IBM’s walls. John Backus and his team were carving out the future of programming with Fortran for the 704 mainframe. This wasn’t just any high-level programming language; this was the frontier. Though its initial compiler implementation limited arrays to a modest three dimensions1, back then, it was more than enough for physicists and engineers, especially considering the alternative was writing raw 704 assembly instructions.

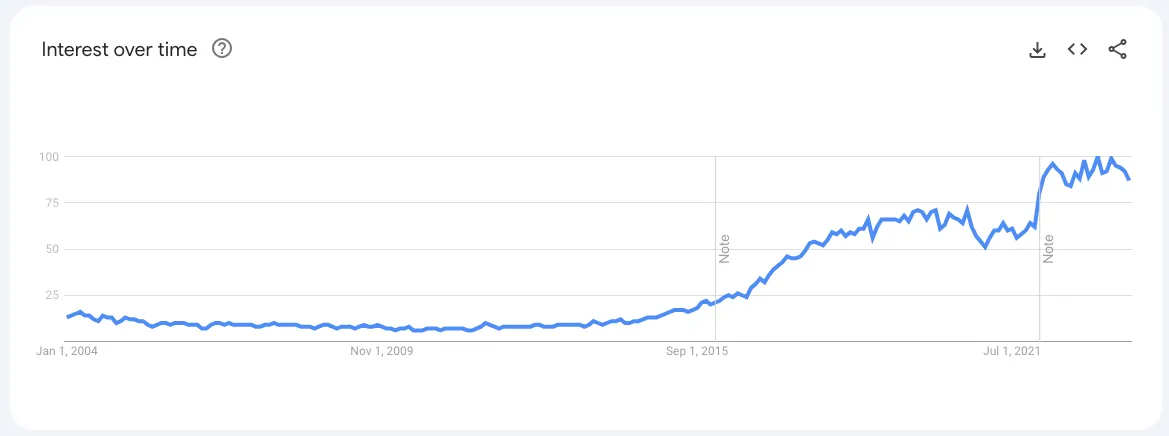

Over the next decade a big bang of new languages were being formed, but some certainly stood out amongst the rest. Ken Iverson and Adin Falkoff were christening the new APL language which made use of Iverson’s notation as a tool for thought. APL was transformative: It promised (and delivered) a reduction in tedious iteration constructs by presenting a new, streamlined way to engage with arrays.2 This approach was so captivating to some that it left an indelible mark, spawing an entire category of languages called Iversonian languages, with some prominent members of the likes of K, Q, J and BQN.3

I could wax poetically about history all day, but I hope it can be taken on good faith now that array languages aren’t mere footnotes in the big book of programming languages. Since their inception, they’ve shaped, influenced, and pushed the boundaries of how we express our intentions to machines. Yet, despite their prowess in many regards, array languages can be unbearably annoying to work with.

The literature in this field isn’t exactly the most welcoming, and with the unwavering need for venture capitalists to fund anything that so much as utters the words “Large Language Model” I figured it’s time for a more friendly soirée into the soft underbelly of the machinery powering AI.

ND-Arrays: the What, How and Why

We should first get everybody up to speed on some vocabulary. An ND-Array is a

special type (pun intended) of generic container. Under the Von Neumann model

architecture of computers, a program’s memory is laid out as an infinitely long

sequential tape of binary values (0s and 1s). Operating Systems allow you to

interface with memory through addresses, typically represented in base 16 (i.e.

0x7ffe454c08cc). So in order to store a multi-dimensional math structure in a

computer you would need to marshal the elements into some type of ordering in

memory, then correctly map the multi-dimensional, mathematical indices to

corresponding memory addresses.

It’s this storage and retrieval of multi-dimensional data from a flat piece of memory that we abstract into what’s called the ND-Array. Don’t forget however that this abstraction is heavily used by non-engineers: Physicists, Biologists, Mathematicians and the like, so anything that can make the code simpler is practically vital for any sort of adoption. Some cornerstone extensions to the abstraction have been implicit operator broadcasting and leading axis iterators.

Arguably the most important feature of this abstraction is the compilers ability to target multiple backends, and doing so efficiently: Making use of SIMD and AVX instructions on X86, the Neon instructions on ARM, Intel’s MKL libraries, NVidia’s CUDA, Vulkan, and MPI (for those supercomputer users).

The combination of all these features, plus the ability to write functions that act on these multi-dimensional arrays, is the total package of “stuff” required to build out a functional Domain-Specific Language (DSL).

Modern Fumblings of a Humble Programmer

Everyone makes mistakes, right? Well, I certainly do; the following are sample frustrations I’ve had over the years that may or may not have been the root causes of production bugs by yours truely.

The One About Referential Transparency

If you’ve ever worked with matrices before you’ve likely tried multiplying them

together. In NumPy you can do it pretty easily using the overloaded @

operator:

x = np.outer(np.arange(0, 10), np.arange(0, 5))

y = np.outer(np.arange(0, 6), np.arange(0, 10))

z = x @ yThe matrices x and y were constructed by taking the outer product of 2

vectors, which lets us see in the code the sizes of each variable’s dimensions.

If you tried running this code, you get this:

ValueError: matmul: Input operand 1 has a mismatch in its core dimension 0, with gufunc signature (n?,k),(k,m?)->(n?,m?)With a careful eye you can spot the issue - matrix multiplication implicitly

relies on the contracted dimensions to match in length. In this example it means

the columnsize of x (which is 5) should match the rowsize of y (which is 6).

This little ‘oopsie’ can seem harmless but unless you catch that ValueError

exception it’s going to crash your process, yikes…

And before you ask, no, linters and unit tests can’t really catch this issue, because a) no API contracts have been broken and b) the sizes of the arrays are only known at run-time and can vary depending on the incoming data. Don’t believe me? Look at the docstring of the function itself which, mind you, isn’t even typed hinted:

matmul(x1, x2)

Matrix product of two arrays.

Parameters

----------

x1, x2 : array_like

Input arrays, scalars not allowed.

Returns

-------

y : ndarray

The matrix product of the inputs.

This is a scalar only when both x1, x2 are 1-d vectors.So we’re blocked at the API level when it comes to improving the powers of the linter to catch issues. And F.Y.I., this issue isn’t just in NumPy; all DSLs embeded in Python will have this problem, including modern hits such as Jax and Taichi.

The One About Reusability

Let’s freshen things up by switching to Fortran for this example. Here we have a

subroutine that computes the inverse L2 distances between any 2 pairs of vectors

from A and B4:

subroutine coulomb_matrix(A, B, C)

real(dp), intent(in) :: A(:, :)

real(dp), intent(in) :: B(:, :)

real(dp), intent(out) :: C(:, :)

integer :: i, j

do i = 1, size(C, 2)

do j = 1, size(C, 1)

C(j, i) = 1.0_dp / norm2(A(:, j) - B(:, i))

end do

end do

end subroutine coulomb_matrixIf you’re already comfortable with array programming you could probably parse

this subroutine effortlessly, but some deep-rooted assumptions are baked right

into the surface. For example, take a closer look at the indexing sequence;

notice how it’s walking along the array from leftmost index to rightmost index?

That’s because it’s assuming that A, B and C were all allocated and

initialized from within Fortran itself, which follows left-aligned mapping (i.e.

column-major) order.

While we’re on the subject of indexing, notice the use of j to index the first

rank of C and the second rank of A. Likewise, we use i to index the

second rank of C and the second rank of B. So in order to avoid a runtime

error we must be certain that size(C, 1) == size(A, 2) and

size(C, 2) == size(B, 2). Something slightly more subtle about the code is the

broadcast the - operator across 2 slices of data, which will only work if the

2 slices have matching shapes. So we also get a new restriction that

size(A, 1) == size(B, 1). Oh, and just to round it all out, we also explicitly

initialize i and j to be 1, not 0, because Fortran was a tool for

mathematicians.

While this code is most certainly hardware-agnostic (and thankfully not written in IBM’s 704 assembly), it is most certainly not language-agnostic, nor even runtime-agnostic. And even though this simple example could easily fit in a blog post, any serious endeavour into the language commands a careful eye and nuanced understanding of the computing model. It could even be argued that Fortran is falling short of its namesake goal of abstracting mathematical functions from their instruction-set implementation.

And just to prove my point, here’s an semantically-equivalent version of the previous subroutine. How quickly does it take you to spot the difference?

subroutine coulomb_matrix(A, B, C)

real(dp), intent(in) :: A(:, :)

real(dp), intent(in) :: B(:, :)

real(dp), intent(out) :: C(:, :)

integer :: i, j

do i = 1, size(C, 1)

do j = 1, size(C, 2)

C(i, j) = 1.0_dp / norm2(A(:, i) - B(:, j))

end do

end do

end subroutine coulomb_matrixSimple equivalence transformations to the implementation like this could be the difference between achieving 500 time steps through your simulation instead of 50,000. It’s bugs like these - the kind that are nicely tucked away in the nuanced crevices - that give engineers the cold sweats.

Embedding ND-Arrays Into a Programming Language

If you were so inclined as to create your own construct for ND-Arrays, you might research how other people have embedded programming languages. While there’s a lot of overlap in their different interfaces, the contrast is deep where they differ.

ND-Arrays as Class Instances

The first (and simplest) approach would be to use object-oriented classes. We can define a superclass of which all arrays would be a subtype of. A simple example can be found in the NumPy source code. NumPy arrays are (roughly) defined as a struct like so:

typedef struct {

size_t dims[MAX_DIMENSIONS];

int data_type;

char *data;

// More bookkeeping data...

} ndarray;This methodology is by far the worst typed. The main issue here is that the dimensionality of the array is conveyed as a runtime value stored adjacent to the pointer. Even though this makes perfect sense from an implementation standpoint, and requires absolutely no language support beyond basic classes, this method provides absolutely no abstraction between the user and the implementation (and no, making these private members wouldn’t act as some magical fix).

There is a way to keep the class implementation while still exposing the array’s rank and shape through the type system. For example, in C++ we can use what’s called Variadic Generics, where we define a base class and a recursive class which deals with a single dimension at a time. Here’s a very simple example where we simply print the dimensions out:

// Base case for the template, representing a 0-dimensional array (a scalar)

template<int... Dims>

class MultiDimArray {

public:

static constexpr size_t Rank = 0;

static void printShape() {

std::cout << "Scalar" << std::endl;

}

};

// Recursive variadic template class for N-dimensional arrays

template<int FirstDim, int... OtherDims>

class MultiDimArray<FirstDim, OtherDims...> : public MultiDimArray<OtherDims...> {

public:

// The size of the first dimension

static constexpr int Size = FirstDim;

// The rank (number of dimensions) of the array

static constexpr size_t Rank = 1 + MultiDimArray<OtherDims...>::Rank;

static void printShape() {

std::cout << FirstDim;

if constexpr (Rank > 1) {

std::cout << " x ";

MultiDimArray<OtherDims...>::printShape();

} else {

std::cout << std::endl;

}

}

};I don’t know about you, but this feels very… clunky, to me. Recursion just feels like the wrong tool from the toolbox. Most DSL engines use the array shape information wholistically in order to dispatch compute in the most effective way possible. It’s certainly possible to go about it this way, but now you’re adding a lot of compiler complexity, not to mention these templates need to know the sizes at compile time, which means you can’t use this vocabulary in any of your APIs if you want your users to be allowed to create dynamically allocated arrays.

ND-Arrays as Pure Functions

One of the most interesting papers I’ve recently read was Google Research’s

experimental Dex language. The main premise

behind the lexicon is to treat ND arrays similarly to (curried) pure functions.

While this idea isn’t exactly new in the literature, its presence in a language

already functional and ergonomic makes the idea flourish5.

For example, to represent a rank-2 array with sizes n in the first rank and d in

the second rank, you would write n=>d=>Float. There’s still a distinction

between regular functions (which use ->) and arrays (which use =>) but this

is mostly in the name of simplifying the frontend parser and preventing total

confusion from skim reading. An example function in Dex6 that

calculates all pairwise distances would be

pairwiseL1 :: n=>d=>Real -> n=>n=>Real

pairwiseL1 x = for i j.sum (for k. abs (x.i.k - x.j.k))The function body makes use of 2 for loops but doesn’t specify what set is being

iterated over. So how does this code compile? Implicitly, by using k to index

the second rank of the array, the compiler can infer that k must iterate over

the set of d. Likewise, i and j are used in the first rank and hence must

be iterators over n. Here, n and d aren’t treated as integers by the type

system. Instead, they’re abstract type parameters, which again brings up the

analogy to parametric polymorphic functions in a language like Haskell:

id :: a -> aa function like this in Haskell derives its type by performing type substitution

at the function call level. Hence id only gets a concrete type when applied:

x :: Int

x = id 2 -- OK: passes type-checkThis is what it looks like to bake array semantics more directly into the type system, but this isn’t the only way.

ND-Arrays as Type Extensions

The previous example with Dex showed off that, by treating NDarrays as analogous to functions, you can bake the rank and sizes directly into the type system through abstract type variables. That’s not the only way to do it however; Futhark is a another research language but from the DIKU instead of Google Research. Futhark’s research goals are entirely based on the compiler itself, so keeping the language simple was a strong preference. Consequently, size types were added to the language as a bolted-on extension to their Hindley–Milner type system. What that means is that Futhark has an exceptional syntax and logic path reserved just for size types.

Consider the relevant example of matrix multiplication. We know that any implementation of matrix multiplication must enforce certain limitations on the input and output matrices. Namely, the rows of the first matrix and columns of the second must have matching sizes, or else the algorithm doesn’t work. Hence we already know ahead of time that

It would be neat if we can check and enforce this property in our code right? Here’s an example type signature of a matrix multiplication implementation in Futhark:

val matmul [n][m][p] : (x: [n][m]f32) -> (y: [m][p]f32) -> [n][p]f32matmul is parametric in its type signature according to the size types n,

m and p. NDarrays are expressed through square brackets, while the type

stored in the array is limited to a subset of numerical types to preserve the

compiler’s ability to produce efficient code. This allows for a balance between

expressive language constructs and efficient, simple codegen from the compiler.

In the following code:

val xss : [10][5]f32

val yss : [5][20]f32

let zss = matmul mss yssthe value zss has infered type [10][20]f32 thanks to the type system, which

is incredible! This power should not be understated: Practically none of the

popular DSLs for NDarray computations can do this level of type inference; not

PyTorch,

TensorFlow,

NumPy or

even statically-compiled polymorphic libraries like

Eigen can express this

level of type inference.

What’s so great about this type inference strategy is that it has many knock-on

effects that cascade downwards. Since zss has a well-defined type that

includes its shape, any code dependent on that variable can further propagate

its type onto new expressions and so on.

Theory Will Only Take You so Far

If exposing the sizes of the tensor ranks through the type system sounds like a great idea, why doesn’t every language do it? The C++ example of using Variadic Generics should act as a useful hint: Type systems - even ones from modern languages - haven’t gained enough complexity to warrant implementing such a powerfully dynamic ability into a strongly typed language

The experienced reader might start to take notice that practically every popular array programing language out there is specifically designed around an extremely dynamic type system. Matlab, APL, NumPy (which inherits its semantics from Python) and many others are all dynamically typed in the sense that a variable

xcan change its type during the course of its lexical lifetime.

Over the Horizon With C++23 and <mdspan>

With our newfound context of ND-arrays, we can finally turn our attention to

(and appreciate) the new <mdspan> from C++23’s STL. It began life in the

Kokkos Project to pass non-owning views into

multi-dimensional array structures, but has now been adopted by the C++ standard

library team in an effort to standardize the vocabulary and create more code

reuse.

Here’s a simple example of the library in action. We’ve allocated heap memory

being managed by the input and output variables and are containerizing them

as mdspan array views so we can pass them to a parallelized algorithm:

std::mdspan A{input, N, M, O};

std::mdspan B{output, N, M, O};

auto v = stdv::catesian_product(

stdv::iota(1, A.extent(0) - 1),

stdv::iota(1, A.extent(1) - 1),

stdv::iota(1, A.extent(2) - 1)

);

std::for_each(ex::par_unseq,

begin(v), end(v),

[=] (auto idx) {

auto [i, j, k] = idx;

B[i, j, k] = ( A[i, j, k-1] +

A[i-1, j, k] +

A[i, j-1, k] + A[i, j, k] + A[i, j+1, k]

+ A[i+1, j, k]

+ A[i, j, k+1]

) / 7.0

}

);Notice how the kernel passed to std::for_each didn’t manually encode any

information about the data’s layout or sizes. Indeed, you can parameterize the

algorithm to pass any object that implements the Iterable

concept.7

We never specified in the defintion of A or B the strides of each dimension.

mdspan arrays have multiple overloaded constructors to allow for sane defaults

avoiding verboseness. One of those defaults is the assumption of a right-aligned

memory layout (i.e. row-major, the default in C/C++ arrays). What if we instead

recieved our arrays from Fortran through the FFI and need to encode the

different stride layout? We just need to use a different constructor - one that

takes an Extents object instead. Luckily, left/right aligned memory layouts

are supper common and so have simplified constructors in the standard library:

auto layout = std::layout_left::mapping{N, M, O};

std::mdspan A{input, layout};

std::mdspan b{output, layout};

This means the encoding of the memory layout is a property of the data that’s checked at runtime (or compile-time in some cases), neither assumed in the algorithm nor passed in at the function boundary layer. This prevents bugs, reduces verbosity and simplifies the kernel all at once. Not bad, eh?

There’s still a glaring limitation with mdspan though, not imposed by the

library itself but rather by the C++ language as a whole. To see this take a

look at 3 different definitions of an extents variable representing a rank 2

array with shape (16, 32):

std::extents<std::dynamic_extent, std::dynamic_extent> e1{16, 32};

std::extents<16, 32> e3;

std::extents<16, std::dynamic_extent> e4{32};As you can see, for extents objects who’s extents are not known at compile-time,

their extent’s type is generalized to dynamic_extent and never recaptured at

runtime. Since C++ can’t use runtime variables in templated code (recall that

templated code is quite literally copy-pasted code generation). Unless C++

extends its type system to allow dependent types (or at the very least expose

dynamic values at the type signature level) we will never be able to write a

compile-time safe matrix multiplication function without external

checks/type-guards.

Footnotes

-

According to Sammet’s “Programming Languages - History and Fundementals” on page 143,

The earliest significant document that seems to exist 1s one marked “PRELIMINARY REPORT, Specifications for the IBM Mathematical FORmula TRANslating System, FORTRAN”, dated November 10, 1954 and issued by the Programming Research Group, Applied Science Division, of IBM.

For more information you can read John Backus’ paper on the matter. ↩

-

The J project has maintained what can only be described as a textual museum of the people involved in APL ↩

-

Even Fortran added it beginning with its 1977 spec! ↩

-

It’s called the coulomb matrix for its use in computing the electromagnetic attraction of 2 objects, but in reality this subroutine is useful in computing any general inverse square law, including Newtonian gravity. Inverse square laws are more than mysterious enough to earn themselves their own blog post, but one step at a time ↩

-

Imagine you had an n-dimensional array of elements. If you knew the full set of indices for every dimension, you can treat the accessor to the array as a multi-parameter function of the form . ↩

-

This example is taken courtesy from this Futhark blog post. ↩

-

Of course, you would still need to ensure that the

Iterableobject returned a 3-tuple index per iteration and that it only spans a subset of the array itself. In other words, bounds-checking isn’t enforced at runtime so it’s still the responsability of the engineer. ↩